Quantum computing: Not even close

Almost everything quantum computing needs in order to become real exists only in PowerPoint at this time. By one estimate, the market could be worth four billion dollars by 2030. But the road there runs through the same challenge that electronics has always faced, one that is even less certain for quantum: the “scaling” challenge.

“At any rate, it seems that the laws of physics present no barrier to reducing the size of computers until bits are the size of atoms, and quantum behavior holds dominant sway.”

— Richard Feynman, Quantum Mechanical Computers, 1985.

“This computer seems to be very delicate and these imperfections may produce considerable havoc.”

— Richard Feynman, Quantum Mechanical Computers, 1985.

Illustration: ‘Number 24,’ Hilma af Klint, 1919

Enthusiasm for quantum computing comes and goes — this year, it’s way up. Stocks of the most prominent names, IonQ, D-Wave Quantum, and Rigetti Computing, have, on average, doubled in price.

But the fundamental challenge for all quantum companies hasn’t changed in two decades. They are all trying to get to “scale,” and it’s not clear when, if ever, any of them will.

I’m emphasizing this point because the scaling issue is fundamental to all computing; without scaling, nothing is meaningful.

Scaling means that as a computer gets more complex — basically, bigger — the amount of work the computer can do increases in proportion. The main example is the integrated circuit, which became more and more powerful over six decades, increasing dramatically what could be done, to the point that you now have a supercomputer in your pocket with today’s phones.

Quantum is not there yet. All the companies have shown interesting machines, but none have proven they can scale those machines the way the traditional semiconductor scaled.

THE QUICK GLOSS ON QUANTUM VERSUS TRADITIONAL COMPUTING

Quantum computing is based on the same principle as traditional computing, or even an abacus for that matter, which is that if you can manipulate some object, you can use it as a symbol to represent counting, and, from there, you can conduct increasingly complex calculations using that symbol system.

In traditional computing, you use a transistor to permit or block the flow of a current of electricity, and that lets you represent, respectively, a 1 or a 0. Performing arithmetic on 1s and 0s, you can represent any kind of logic, and therefore anything you want to calculate.

With quantum, instead of manipulating an electrical current, one manipulates a single particle, such as an atom, an electron, or a photon. Unlike a flow of current, which has only one of two possible values at a time, 0 or 1, an atom or other particle is not in either state until it is measured to determine its definite value.

Until it is measured, until the world of classical physics flattens everything to either/or, the atom or the photon has an infinite number of possible states. To visualize this, scientists will use the mental construct of a point on the surface of the earth at the intersection of longitude and latitude. The point could be anywhere.

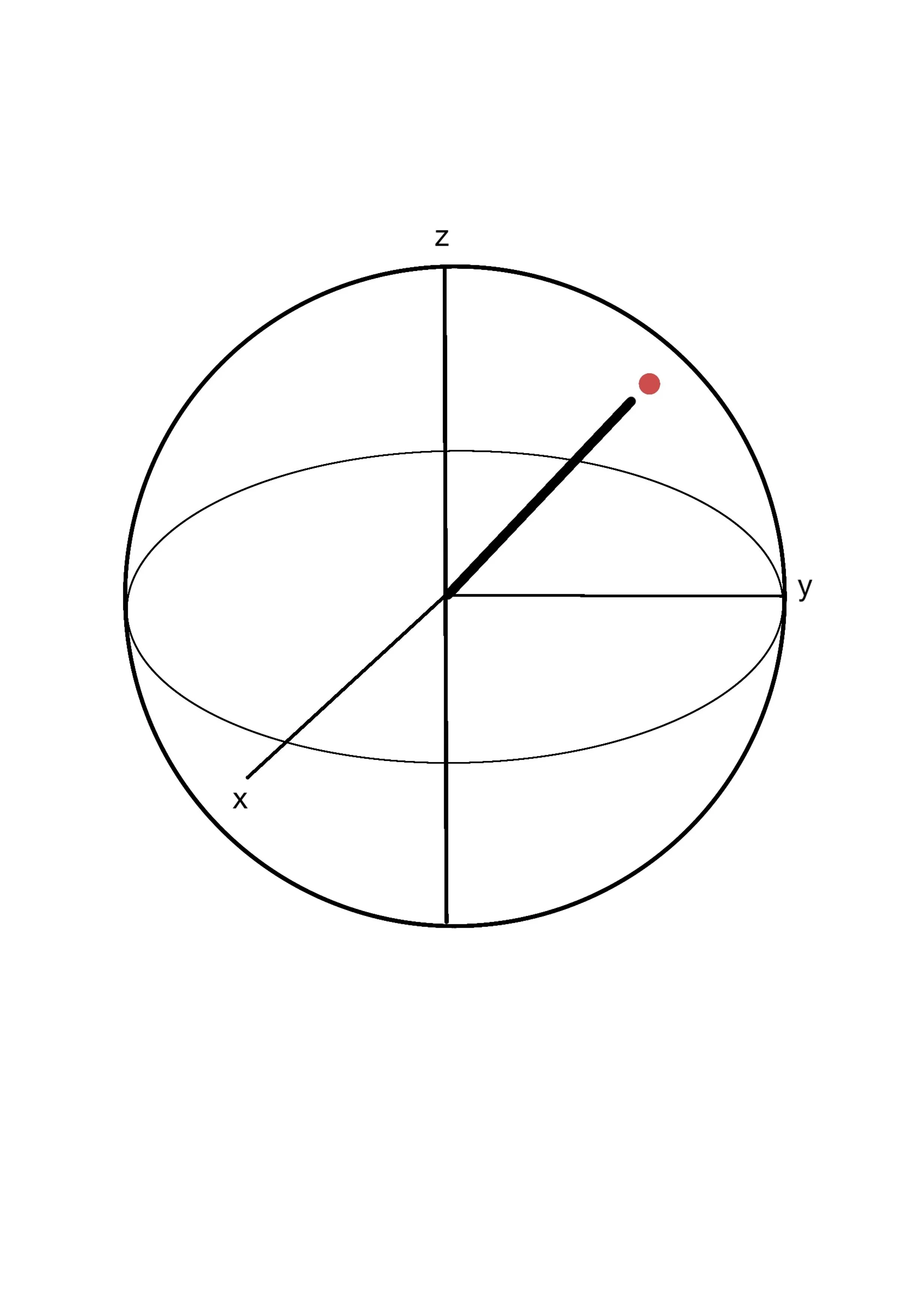

The Bloch Sphere is an intellectual construct representing the possible states of a single qubit before it is measured.

The formal mathematical version of that is an abstract sphere called a “Bloch Sphere,” named for physicist Felix Bloch. The particle can have any value on the surface of the Bloch Sphere specified by the coordinates of three axes, x, y, and z.

This is not a real object in the world; it’s just a mathematical construct to help one think about the infinite possible states a particle can occupy before it is measured.
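For readers who like to see the arithmetic, here is a minimal sketch of the latitude-and-longitude idea. It assumes the standard textbook parameterization of a single qubit by two angles, which I am calling `theta` (how far down from the north pole) and `phi` (how far around the equator). The function names are mine, purely for illustration.

```python
import cmath
import math


def bloch_point(theta, phi):
    """Return the (x, y, z) coordinates on the unit sphere for the two angles."""
    x = math.sin(theta) * math.cos(phi)
    y = math.sin(theta) * math.sin(phi)
    z = math.cos(theta)
    return x, y, z


def qubit_amplitudes(theta, phi):
    """Return the amplitudes for reading out 0 and 1 at that point on the sphere."""
    amp0 = math.cos(theta / 2)
    amp1 = cmath.exp(1j * phi) * math.sin(theta / 2)
    return amp0, amp1


def measurement_probabilities(theta, phi):
    """Probability of measuring 0 or 1: the squared magnitude of each amplitude."""
    amp0, amp1 = qubit_amplitudes(theta, phi)
    return abs(amp0) ** 2, abs(amp1) ** 2


# A point on the "equator" of the sphere is a 50/50 mix of 0 and 1.
print(bloch_point(math.pi / 2, 0.0))
print(measurement_probabilities(math.pi / 2, 0.0))
```

The north pole (`theta = 0`) always reads out as 0, the south pole always as 1, and every point in between is some weighted blend until the measurement forces a choice.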

What’s important is, using some device, a laser, or radio waves, or some other tool, we can “trap” the particle, and then we can manipulate the particle, making it move through that infinite variety of states represented by the points on the Bloch Sphere.

When the particle is manipulated in that way, we have an analog to the transistor known as a quantum bit, a “qubit,” but one that is rich with possibility.

The potential of quantum computing is in how many values can be represented by this qubit versus a transistor. The transistor can only be a 1 or a 0. That means as you increase the combinations of 1s and 0s that you have to count, you have to perform more and more operations. The problem can become intractable as you get to very large numbers of calculations.

In contrast, a group of multiple qubits can, in theory, simultaneously represent all the possible combinations of 1s and 0s you need. That phenomenon, known as a “superposition,” would allow you in one fell swoop to compute all the different values that it takes a regular computer multiple operations to carry out.

That is the source of the fabled exponential speed-up in quantum computing.
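To make the counting concrete, here is a small sketch in plain Python. It assumes the simplest possible case, an equal superposition in which every combination of 1s and 0s carries the same weight. It shows two things: the list of numbers describing the qubits doubles with each qubit added, and a measurement still hands back only one combination. The function names are hypothetical, chosen for illustration.

```python
import math
import random


def uniform_superposition(n_qubits):
    """Return the 2**n amplitudes of n qubits in an equal superposition."""
    dim = 2 ** n_qubits
    amplitude = 1 / math.sqrt(dim)
    return [amplitude] * dim


def measure(amplitudes, rng=random):
    """Sample one bit string; each outcome's probability is |amplitude|**2."""
    probabilities = [abs(a) ** 2 for a in amplitudes]
    index = rng.choices(range(len(amplitudes)), weights=probabilities, k=1)[0]
    n_qubits = (len(amplitudes) - 1).bit_length()
    return format(index, f"0{n_qubits}b")


for n in (1, 2, 3, 10, 20):
    print(n, "qubits ->", len(uniform_superposition(n)), "amplitudes")

# Measuring collapses all of those possibilities to a single string of bits.
print(measure(uniform_superposition(3)))
```

The doubling is where the exponential comes from. The single bit string at the end is why the speed-up is not automatic: an algorithm has to arrange things so the one answer that comes out is the useful one.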

There are a couple of problems, though — a big problem and a slightly smaller one.

The big problem is that it’s simply not true that every problem gets exponentially faster with qubits versus transistors.

As Scott Aaronson, a computational complexity scholar at the University of Texas at Austin, has pointed out, it will depend on the problem. Running all the values of a problem faster than a traditional machine might still yield a wrong answer and still take a lot of time. The proverbial needle-in-a-haystack is still an enormous problem that might not yield the needle even with a faster search tool such as a quantum computer.

Aaronson’s excellent blog has regular reminders of the puffery of dramatic quantum speed-up claims. A recent post about a questionable claim of quantum “advantage” from HSBC drew the delicious quip from Aaronson, “There are more red flags here than in a People’s Liberation Army parade.”

Aaronson has called these irresponsible headlines “qombies,” meaning, “undead narratives of ‘quantum advantage for important business problems’ detached from any serious underlying truth-claim.”

(For in-depth discussion of the matter, see Aaronson’s classic blog post from 2007, or one of his more dense papers on the topic. Or buy his book, Quantum Computing Since Democritus.)

The fact is that we are only learning, as quantum computers get built, which kinds of problems will in practical terms display a tremendous speed-up when they are done “quantumly,” if you will. The true promise of quantum is still being found out.

A smaller but still significant problem is that in order to do anything, you need lots of qubits on which to operate.

A programmer who wants to create an algorithm that does some useful work won’t be running a single operation once and that’s it. Instead, they must chain together multiple groups of interacting qubits in various “logic gates” to create what’s called a circuit. Ultimately, then, you have to scale up the qubits.

The ability to “scale up” qubits is more complicated than making more of the same thing. The kinds of qubits one can manufacture can be more or less reliable. Qubits interact with aspects of the environment such as cosmic rays. And as they do so, the superposition that is so important degrades as a result. Every qubit has to last long enough to carry out the quantum operation before it degrades, an aspect known as the “coherence” time of a qubit, the brief span of time in which it is usable.

And so, every quantum machine has not only to scale qubits, but also to do so in a way that leads to quality qubits that last long enough for the quantum operations to be carried out. That quality challenge is generally referred to as “fidelity.”
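A back-of-the-envelope sketch shows why both coherence and fidelity bite as circuits grow. It rests on two simplifying assumptions of mine: each gate succeeds independently with the same probability, and gates run one after another. The numbers are made up for illustration and do not describe any vendor's machine.

```python
def circuit_success_probability(gate_fidelity, n_gates):
    """Chance that every gate in a circuit works, assuming independent errors."""
    return gate_fidelity ** n_gates


def max_gates_within_coherence(coherence_time_ns, gate_time_ns):
    """How many sequential gates fit before the qubit degrades (integer nanoseconds)."""
    return coherence_time_ns // gate_time_ns


# Hypothetical figures: 99% and 99.9% gate fidelity, circuits of growing depth.
for fidelity in (0.99, 0.999):
    for n_gates in (10, 100, 1000):
        p = circuit_success_probability(fidelity, n_gates)
        print(f"fidelity {fidelity}, {n_gates} gates -> success {p:.3f}")

# Hypothetical figures: 100 microseconds of coherence, 100 nanoseconds per gate.
print(max_gates_within_coherence(100_000, 100), "gates fit in the coherence window")
```

Under those assumptions, a gate that works 99% of the time yields a hundred-gate circuit that works only about 37% of the time, which is why small gains in fidelity matter so much.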

Let’s throw in another complication. You not only have to increase your qubits, and to do so with fidelity, you need to measure out the result of any qubit interactions to yield the 1s and 0s that we can use in the “real world,” where all our data lives. And we may need to measure many times in succession because we may want to get an output from a quantum circuit and then put it into another quantum circuit, and that might require going into the realm of the real world in-between. If you want to compute “Big Data,” you need a way to get Big Data in and out of the machine.

And so the problem is not merely that of building a machine with a lot of qubits of high fidelity, but also building a machine that can have many inputs and outputs between and among circuits of qubits, an extra layer of complexity. I haven’t even mentioned the problem of “quantum memory,” which would be a memory chip analogous to SRAM or DRAM that would store qubits for later use. That is itself an emerging area of technological experimentation.

And, so, leaving aside the deep theoretical questions of Aaronson and other scholars, everything comes back to that simpler problem of scale, getting enough quality qubits so that you can create sufficiently complex circuits so that you can do proportionately greater work, plus the task of joining those qubit circuits to input and output in order to connect quantum circuits to the real world of electronics.

THE SCALE ROADMAP

Today’s quantum computing machines are not there yet. Most have tens of qubits or perhaps a hundred or so. The Street knows this. Tucked inside optimistic reports on quantum are caveats that are briefly passed over but that are in fact the whole story.

Merrill Lynch’s Wamsi Mohan last week offered a “deep dive” on quantum computing. His financial model imagines a development from almost no industry revenue at the moment to lots in five years.

“We estimate that the quantum computing market in 2024 was worth ~$300 million and by 2030, we expect the market to reach ~$4 billion.”

There is lots of detail in Mohan’s report, but a fundamental fact is acknowledged up-front. “While the promise of quantum computing is real, there are technological impediments to scaling that are currently being worked on,” writes Mohan.

“Once these scaling hurdles are overcome (perhaps by 2030), we expect a much more meaningful inflection in revenues.”
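For a sense of how steep that forecast is, here is the compound annual growth rate implied by the two figures in the quote, a rough sketch assuming six years of growth from 2024 to 2030.

```python
def implied_cagr(start_value, end_value, years):
    """Compound annual growth rate needed to get from start_value to end_value."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures from the quoted estimate: ~$300 million in 2024, ~$4 billion in 2030.
rate = implied_cagr(300, 4000, 2030 - 2024)
print(f"Implied growth: {rate:.0%} per year")
```

That works out to growth of a bit more than fifty percent a year, every year, for six years.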

Mohan sounds optimistic, but the problem is tricky.

Scaling unfolds in two ways: the underlying “hardware” and the “logical” structure of qubits that are usable.

For every qubit that is built, what is known in the trade as a “physical” qubit, you may not get a successful measurement. That means that you need multiple physical qubits to yield a clean result for any operation, a “logical” qubit. Hence, what’s actually usable today is even less helpful than it appears.
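A loose analogy from classical computing gives the flavor of why one logical qubit costs several physical ones. The sketch below assumes a simple repetition scheme: make an odd number of copies, let each fail independently with the same probability, and take a majority vote. Real quantum error correction is considerably more involved, so treat this as an illustration of the trade-off, not as how any vendor does it.

```python
from math import comb


def logical_error_rate(physical_error_rate, n_physical):
    """Probability that a majority of n independent copies fail at once."""
    if n_physical % 2 == 0:
        raise ValueError("use an odd number of copies so the vote cannot tie")
    p = physical_error_rate
    majority = n_physical // 2 + 1
    return sum(
        comb(n_physical, k) * p ** k * (1 - p) ** (n_physical - k)
        for k in range(majority, n_physical + 1)
    )


# Hypothetical 10% error rate per physical copy.
for copies in (1, 3, 5, 7, 9):
    print(copies, "copies ->", f"{logical_error_rate(0.10, copies):.5f}")
```

With a 10% physical error rate, three copies bring the voted error down to about 2.8%, and five copies to under 1%. The usable count shrinks accordingly: a machine with a hundred physical qubits may offer only a handful of logical ones.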

How many qubits are enough? That is itself an area of expanding research. The answer seems to depend, again, on what kind of problem you are trying to solve and by what kind of algorithm. There is no clear answer yet as to what would be an optimal number of qubits, gates, or circuits.

In the meantime, vendors building machines promise that they will achieve hundreds, thousands, even millions of qubits some years in the future. The promises exist only in PowerPoint slides.

Here, a big picture is nicely provided by Olivier Ezratty, a consultant and speaker for hire about quantum computing who is often consulted by brokerages trying to handicap the market.

Ezratty has written a lovely book, assiduously updated over the years and free to download, that exhaustively covers every aspect of the field. Be warned, the full version is 1,512 pages! There is, however, a summary version at the same download link that is just thirty-six pages.

A couple of Ezratty’s charts are simply marvelous for understanding the lay of the land. They show how the different vendors are attempting to scale their numbers of qubits over time:

Source: Olivier Ezratty, Understanding Quantum Technologies

Source: Olivier Ezratty, Understanding Quantum Technologies

(On a related note, Ezratty has a wonderful table of the ten worst myths about quantum computing that all ignore the complexity of quantum circuits, which is reprinted at the bottom of this post.)

As useful as these graphics are, again, the devil is in the details of how well any collection of qubits and gates will map to problems to produce a significant advantage over classical computing. What’s not shown is what we will learn if and when those qubits come to market.

Put another way, scaling is not guaranteed to be linear by simply turning a dial and increasing the qubits — whether or not a vendor even has such a dial to reliably turn.

There are challenges on the way to making the qubits even when the path taken is as straightforward as can be.

I wrote recently about the “analyst day” presentation of IonQ, which appears to have the most revenue so far of any company making quantum machines.

The company is throwing all its weight behind semiconductor engineering as the thing that is going to allow IonQ to build and maintain an edge on the other two, Rigetti and D-Wave, but also on IBM and Alphabet and Amazon and Microsoft and startups such as Quantinuum, and all other challengers. As I wrote in that piece, it’s not a slam dunk for IonQ to build bigger and bigger chips, even though the company plans to leverage the semiconductor manufacturing supply chain.

Once you’ve got the scaling challenge front and center, there’s a second big challenge for every vendor: incumbency.

While the startups all work out their qubit roadmaps, Nvidia’s GPUs get better and better, enabling more and more power. While waiting for enough qubits to do real stuff, the market can be lured away to GPUs that really do stuff. In fact, artificial intelligence running on top-of-the-line systems has a lot of potential to solve, via classical means, problems that might have been intractable until now. It’s a race, in a sense, and it’s not clear quantum will scale fast enough to create a sufficient gap with GPUs to be attractive.

A secondary problem for quantum within the incumbency problem is that the vast amounts of funding being devoted to running GPUs by OpenAI, by Oracle, and by many others mean that big money for GPUs could dominate budgets, leaving a lot less to spend on quantum. It is what’s known as market “crowding,” pulling away resources that could go to developing quantum.

Incidentally, quantum is a great way to sell more GPUs. For example, in June, in a paper not yet peer reviewed, IonQ researchers performed a complex experiment in chemistry in partnership with Nvidia, Amazon’s AWS cloud service, and drug giant AstraZeneca.

There aren’t enough qubits to do the whole experiment. Instead, the quantum machine was used to prepare an arrangement of a handful of qubits as a starting point, the initial state of the experiment. Most of the actual computation was done by Nvidia GPU chips. In fact, a million GPU “cores” were needed, which were procured from Amazon AWS instances of GPU clusters.

At that rate, Nvidia stands to make a bundle every time someone uses a limited quantum computer to prove how much can be done with quantum.

Another great sales pitch for plain old computing is the simulation of quantum. In order for scientists and programmers to start to practice making algorithms before the fully scaled quantum computers are here, they need something to practice on. You can practice by recreating what a quantum computer would be like in software running on a traditional computer. But, to do so, you have to have a big traditional machine to equal the complex probabilistic numbers of each of your qubits. Again, that starts to balloon the spending on conventional computing.
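Some rough arithmetic shows how fast the bill grows. The sketch assumes the most straightforward kind of simulation, one that stores a complex number for every possible combination of 1s and 0s, at 16 bytes per number. Cleverer simulation methods exist that need less memory for some circuits, so take this as the brute-force case.

```python
BYTES_PER_AMPLITUDE = 16  # one complex number stored as two 64-bit floats


def statevector_bytes(n_qubits):
    """Memory needed to hold every amplitude of an n-qubit state."""
    return (2 ** n_qubits) * BYTES_PER_AMPLITUDE


def human_readable(n_bytes):
    """Format a byte count using binary units."""
    units = ["B", "KiB", "MiB", "GiB", "TiB", "PiB", "EiB"]
    value = float(n_bytes)
    for unit in units:
        if value < 1024 or unit == units[-1]:
            return f"{value:,.0f} {unit}"
        value /= 1024


for n in (10, 20, 30, 40, 50):
    print(f"{n} qubits -> {human_readable(statevector_bytes(n))}")
```

Thirty qubits fit in the 16 GiB of a well-equipped laptop. Every additional qubit doubles the requirement, so fifty qubits need about a million times more, which is data-center territory.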

It’s no wonder that Nvidia CEO Jensen Huang has lately become such a fan of quantum: he can exploit quantum enthusiasm to sell lots of GPUs. In the scaling race, my money is still on Huang.

The best hope for the quantum industry is a kind of harmony of the two, where quantum companies can use what few qubits they have to serve as an adjunct to GPUs. That, again, depends on finding the right kinds of problems for which some real quantum speed-up now would be sufficiently meaningful to propel quantum sales. And that’s possible but not certain.

I think you can see there are lots of obstacles to building machines and building business plans. It’s not as simple as increasing what has already been done by the various vendors.

The two quotes from the late physicist Richard Feynman that I placed up top, from a seminal 1985 article in which he provided the first clear perspective on quantum computers, really encapsulate the present situation.

On the one hand, the opportunity to make atomic computers is firmly grounded in scientific exploration. On the other hand, to build such a machine in practical terms is full of difficult issues.

Without cracking the matter of scale, today’s industry is not even close to achieving the promise of quantum computing.

THE STOCKS

After the substantial increases this year, the stocks have preposterous valuations. They trade for an average of two hundred and sixty times next year’s projected revenue. The stocks have no support in terms of valuation.

The entire stock proposition rests either on momentum from positive headlines or on estimates that meaningfully inflect from where they are now.

On the second score, history doesn’t bode well. Estimates for all three companies for annual revenue have been flat or declining for two years. At this time in 2023, IonQ was expected to make $87.5 million in revenue this year. The projection now is only slightly higher, $91.3 million. D-Wave back then was projected to make $59.3 million this year, but now the estimate is $24.6 million. And Rigetti was thought back then to be making $30.2 million in 2025; now that figure is $8.2 million.
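Put as percentages, the revisions look like this. The figures in the sketch are the ones cited above, in millions of dollars; nothing else is assumed.

```python
# (estimate made in 2023 for this year, current estimate), in $ millions
ESTIMATES = {
    "IonQ": (87.5, 91.3),
    "D-Wave": (59.3, 24.6),
    "Rigetti": (30.2, 8.2),
}


def percent_change(old, new):
    """Percentage change from the old estimate to the new one."""
    return (new - old) / old * 100


for company, (old, new) in ESTIMATES.items():
    print(f"{company}: {old} -> {new} ({percent_change(old, new):+.1f}%)")
```

IonQ’s estimate has risen about 4%, while D-Wave’s has fallen by more than half and Rigetti’s by nearly three-quarters.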

Based on the challenges identified above, it’s unlikely the companies are on the threshold of a dramatic turn in their financial profile. That means the payoff for investors will rest a lot on how the headlines unfold.◆

Source: Olivier Ezratty, Understanding Quantum Technologies

Disclosure: Tiernan Ray owns no stock in anything that he writes about, and there is no business relationship between Tiernan Ray LLC, the publisher of The Technology Letter, and any of the companies covered. See here for further details.